January 14, 2008

Shared, err, something

From (the otherwise great book) Advanced Rails, under Ch. 10, "Rails Deployment"...

"The canonical Rails answer to the scalability question is shared-nothing (which really means shared-database): design the system so that nearly any bottleneck can be removed by adding hardware."

Nonsensical, but cute.

This seems like a classic case of Semantic Diffusion. It's funny how people find a buzzword and latch onto it while continuing to do what they always did. "We're agile because we budget no time for design" -- "We're REST because we use HTTP GET for all of our operations" -- "We're shared nothing because we can scale one dimension of our app; pay no attention to the shared database behind the curtain, that's a necessary evil".

A shared nothing architecture would imply:

- each Mongrel has its own Rails deployment with its own database

- that database has a subset of the total application's data

- some prior node made the decision on how to route the request.

...And we don't always do this because some domains are not easily partitionable, and even when they are, you get into CAP tradeoffs wherein our predominant model of a highly available and consistent world is ruined.
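The three conditions above can be sketched in a few lines. This is only an illustration, not how any real Rails deployment is wired: `Partition`, `Router`, and the `mongrel-N` names are hypothetical, each private database is modelled as a plain dict, and hashing the key is just one assumed way for the "prior node" to pick a partition.

```python
import hashlib


class Partition:
    """One app server plus its own private database (modelled as a dict)."""

    def __init__(self, name):
        self.name = name
        self.db = {}  # holds only this partition's subset of the data

    def handle(self, key, value=None):
        # A request with a value is a write; without one it is a read.
        if value is not None:
            self.db[key] = value
        return self.db.get(key)


class Router:
    """The 'prior node' that decides which partition owns a request."""

    def __init__(self, partitions):
        self.partitions = partitions

    def partition_for(self, key):
        # Stable hash of the key, so the same key always lands on the same node.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.partitions)
        return self.partitions[index]

    def request(self, key, value=None):
        return self.partition_for(key).handle(key, value)


partitions = [Partition(f"mongrel-{i}") for i in range(4)]
router = Router(partitions)
for user in ("alice", "bob", "carol", "dave"):
    router.request(user, {"name": user})

for p in partitions:
    print(p.name, sorted(p.db))
```

Nothing is shared between partitions here, which is exactly why it gets awkward the moment a request needs data owned by two of them.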

Now, I know that some would ask "what about caches?". The "popular" shared-something architecture of most large-scale apps seems to imply:

- each app server has its own cache fragment

- replicas might be spread across the cache for fault tolerance

- the distributed cache handles 99% of requests

- what few writes we have trickle to a shared database (maybe asynchronously)

Which does help tremendously if you have a "read mostly" application, though it doesn't reduce the scaling costs of shared writes. Good for web apps, but from what I've seen (outside of brokerages) this has not caught on in the enterprise as broadly as one would hope, except as an "oh shit!" afterthought. Hopefully that will change, where appropriate, but recognize that these caches, whether memcached, Tangosol, Gigaspaces, or Real Application Clusters, are about making "shared write" scalability possible beyond where it was in the past; they don't mean you're going to scale the way Google does.
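To make the read/write asymmetry concrete, here is a rough sketch under heavy simplifying assumptions: `SharedDatabase` and `CachedStore` are hypothetical names, the cache is a single in-process dict rather than a distributed one, and writes go through synchronously instead of trickling asynchronously. It only counts how many operations still reach the shared database.

```python
class SharedDatabase:
    """Stand-in for the one database every app server shares."""

    def __init__(self):
        self.rows = {}
        self.reads = 0
        self.writes = 0

    def read(self, key):
        self.reads += 1
        return self.rows.get(key)

    def write(self, key, value):
        self.writes += 1
        self.rows[key] = value


class CachedStore:
    """Read-through, write-through cache in front of the shared database."""

    def __init__(self, db):
        self.db = db
        self.cache = {}

    def get(self, key):
        if key in self.cache:
            return self.cache[key]  # cache hit: the database is not touched
        value = self.db.read(key)   # cache miss: fall back to the database
        self.cache[key] = value
        return value

    def put(self, key, value):
        self.cache[key] = value
        self.db.write(key, value)   # every write still lands on the shared database


db = SharedDatabase()
store = CachedStore(db)
for i in range(10):
    store.put(f"user:{i}", f"profile-{i}")
for i in range(990):
    store.get(f"user:{i % 10}")

print(db.reads, db.writes)
```

With this toy workload the reads never reach the database, while all ten writes do. The cache absorbs the read side and leaves the shared-write side where it was.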

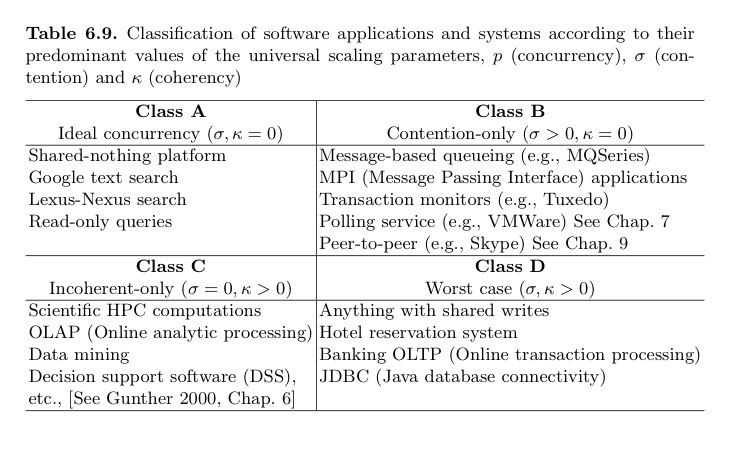

Here's one of Neil Gunther's graphics that shows software scalability tradeoffs based on your data's potential of contention, or your architecture's coherency overhead:

The universal scalability equation is:

$$C(N) = \frac{N}{1 + \sigma N + \kappa N (N - 1)}$$

Where, for software scale, N is the number of active threads/processes in your app server, σ is the data contention parameter, and κ is the cache coherency-delay parameter. Read the Guerrilla Capacity Planning Manual for more details, or pick up his book.
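For a feel of how the two parameters bend the curve, here is a small sketch that evaluates the equation as it is written in this post. Gunther's own publications write the contention term as σ(N − 1), which normalizes C(1) to 1, so treat the exact numbers as illustrative. The function names and the parameter values (σ = 0.05, κ = 0.001) are made up for the example, not measured from any system.

```python
def capacity(n, sigma, kappa):
    """Relative capacity C(N) for N threads/processes, per the equation above."""
    return n / (1 + sigma * n + kappa * n * (n - 1))


def best_n(sigma, kappa, max_n=256):
    """Brute-force search for the N in 1..max_n with the highest capacity."""
    return max(range(1, max_n + 1), key=lambda n: capacity(n, sigma, kappa))


# No contention, no coherency delay: capacity grows linearly with N.
print([capacity(n, 0.0, 0.0) for n in (1, 8, 64)])

# Contention only: the curve flattens out but never turns down.
print([round(capacity(n, 0.05, 0.0), 2) for n in (1, 8, 64)])

# Contention plus coherency delay: capacity peaks, then adding N makes it worse.
peak = best_n(0.05, 0.001)
print(peak, round(capacity(peak, 0.05, 0.001), 2), round(capacity(200, 0.05, 0.001), 2))
```

The κ term is the one that makes throughput go backwards. Once it is non-zero there is some N past which adding threads or nodes costs you capacity.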

I like this model, but there are some caveats: Firstly, I don't quite understand why Tuxedo is in Class B, yet OLTP is in Class D. Secondly, Class D's examples are so high-level that they may be misleading. The real problem here is "shared writes", which can be further broken down into a) "hotspots", i.e. a record that everyone wants to update concurrently, and b) limited write volumes due to transaction commits needing durability.

Having said this, this model shows the fundamental difference between "Shared-Nothing" and our multi-node, distributed-cache "Shared-Something". Shared-nothing architectures are those that have near-zero contention or coherency costs. Shared-something, by contrast, is about providing systems that reduce the coherency and contention delays for Class D software without eliminating them. They help the underlying hardware scalability, but don't change the nature of the software itself.

For example, write-through caching, whether in Tangosol or in a SAN array's cache, can help raise commit volumes. Oracle RAC has one Tlog per cluster node, also potentially raising volumes. Networked cache coherency eliminates disk latency. But the important thing to recognize is that the nature of the software hasn't changed; we've just pushed out the scaling asymptote for certain workloads.

Anyway, let's please call a spade a spade, mm'kay? I just don't like muddied waters, this stuff is hard enough as it is....