January 30, 2008

Relations in the cloud

I've been hearing a lot about how RDBMSs are no longer appropriate for data management on the Web. I'm curious about this.

Future users of megadata should be protected from having to know how the data is organized in the computing cloud. A prompting service which supplies such information is not a satisfactory solution. Activities of users through web browsers and most application programs should remain unaffected when the internal representation of data is changed and even when some aspects of the external representation are changed. Changes in data representation will often be needed as a result of changes in query, update, and report traffic and natural growth in the types of stored information.

I didn't write the above; it was (mostly) said 38 years ago. I think the arguments still hold up. Sure, Google and Yahoo! make do with their custom databases. But are these general-purpose? Do they suffer from the same problems as the data stores of the '60s?

Certainly there's a balance of transparency vs. abstraction here that we need to consider: does a network-based data grid make a logical view of data impossible due to inherent limitations of distribution?

I'm not so sure. To me this is just a matter of adjusting one's data design to incorporate estimates, defaults, or dynamically assessed values when portions of the data are unavailable or inconsistent. If we don't preserve logical relationships in as simple a way as possible, aren't we just making our lives more complicated and our systems more brittle?

I do agree that there's a lot to be said about throwing out the classic RDBMS implementation assumptions of N=1 data sets, ACID constraints at all times, etc.

I do not agree that it's time to throw out the Relational model. It would be like saying "we need to throw out this so-called 'logic' to get any real work done around here".

There is a fad afoot that "everything that Amazon, Google, eBay, Yahoo!, SixApart, etc. does is goodness". I think there is a lot of merit in studying their approaches to scaling questions, but I'm not sure their solutions are always general purpose.

For example, eBay doesn't enable referential integrity in the database, or use transactions - they handle it all in the application layer. But, that doesn't always seem right to me. I've seen cases where serious mistakes were made in the object model because the integrity constraints weren't well thought out. Yes, it may be what was necessary at eBay's scale due to the limits of Oracle's implementation of these things, but is this what everyone should do? Would it not be better long-term if we improved the underlying data management platform? I'm concerned to see a lot of people talking about custom-integrity, denormalization, and custom-consistency code as a pillar of the new reality of life in the cloud instead of a temporary aberration while we shift our data management systems to this new grid/cloud-focused physical architecture. Or perhaps this is all they've known, and the database never actually enforced anything for them. I recall back in 1997, a room full of AS/400 developers were being introduced to this new, crazy "automated referential integrity" idea, so it's not obvious to everyone.

The big problem is that inconsistency speeds data decay. Increasingly poor quality data leads to lost opportunities and poor customer satisfaction. I hope people remember that the key word in eventual consistency is eventual. Not some kind of caricatured "you can't be consistent if you hope to scale" argument.

Perhaps this is just due to historical misunderstanding. The performance gain from de-normalization and avoiding joins has nothing to do with the model itself; it has to do with the way the physical databases have been traditionally constrained. On the bright side, column-oriented stores are becoming more popular, so perhaps we're on the cusp of a wave of innovation in how flexible the underlying physical structure is.

I also fear there's just a widespread disdain for mathematical logic among programmers. Without a math background, it takes a long time for one to understand set theory + FOL and relate it to how SQL works, so most just use it as a dumb bit store. The Semantic Web provides hope that the Relational Model will live on in some form, though many still find it scary.

In any case, I think there are many years of debate ahead as to the complexities and architecture of data management in the cloud. It's not as easy as some currently seem to think.

January 14, 2008

Shared, err, something

From (the otherwise great book) Advanced Rails, under Ch. 10, "Rails Deployment"...

"The canonical Rails answer to the scalability question is shared-nothing (which really means shared-database): design the system so that nearly any bottleneck can be removed by adding hardware."

Nonsensical, but cute.

This seems like a classic case of Semantic Diffusion. It's funny how people find a buzzword, and latch onto it, while continuing to do what they always did. "We're agile because we budget no time for design" -- "We're REST because we use HTTP GET for all of our operations" -- "We're shared nothing because we can scale one dimension of our app, pay no attention to the shared database behind the curtain, that's a necessary evil".

A shared nothing architecture would imply:

- each Mongrel has its own Rails deployment with its own database

- that database has a subset of the total application's data

- some prior node made the decision on how to route the request.

...And we don't always do this because some domains are not easily partitionable, and even so, you get into CAP tradeoffs wherein our predominant model of a highly available and consistent world is ruined.
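
The three properties above can be sketched in a few lines. This is only an illustration, assuming a simple hash partitioning scheme; the node names, the `route` function, and the per-node dictionaries standing in for databases are all hypothetical.

```python
import hashlib

# Hypothetical node names; each node owns a private "database" (a dict here).
NODES = ["node-a", "node-b", "node-c"]
databases = {node: {} for node in NODES}


def route(key: str) -> str:
    """The 'prior node' decision: map a key to exactly one partition."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return NODES[int.from_bytes(digest[:8], "big") % len(NODES)]


def write(key: str, value: str) -> None:
    # Only the owning node's database is touched; nothing is shared.
    databases[route(key)][key] = value


def read(key: str):
    return databases[route(key)].get(key)


for user in ["alice", "bob", "carol", "dave"]:
    write(user, f"profile of {user}")

print({node: sorted(db) for node, db in databases.items()})
```

Any request that needs data from two partitions at once falls outside this sketch, which is exactly where the partitioning and CAP difficulties begin.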

Now, I know that some would ask "what about caches?". The "popular" shared-something architecture of most large scale apps seems to imply:

- each app server has its own cache fragment

- replicas might be spread across the cache for fault tolerance

- the distributed cache handles 99% of requests

- what few writes we have trickle to a shared database (maybe asynchronously)

Which does help tremendously if you have a "read mostly" application, though it doesn't help reduce the scaling costs of shared writes. Good for web apps, but from what I've seen (outside of brokerages) this has not caught on in the enterprise as broadly as one would hope, except as an "oh shit!" afterthought. Hopefully that will change, where appropriate, but recognize that these caches, whether memcached, or Tangosol, or Gigaspaces, or Real Application Clusters are about making "shared write" scalability possible beyond where it was in the past; it doesn't mean you're going to scale the way Google does.
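
As a rough illustration of that shared-something pattern, here is a minimal sketch of a read-through cache with asynchronous (write-behind) persistence. The class and its names are hypothetical, a plain dict stands in for the shared database, and real products such as memcached or Tangosol work quite differently in detail.

```python
from collections import deque


class WriteBehindCache:
    """Read-through cache whose writes trickle to a shared store later."""

    def __init__(self, database: dict):
        self.database = database   # the shared database (a dict stands in)
        self.cache = {}            # this app server's cache fragment
        self.pending = deque()     # writes not yet persisted
        self.db_reads = 0

    def get(self, key):
        if key not in self.cache:          # cache miss: go to the database
            self.db_reads += 1
            self.cache[key] = self.database[key]
        return self.cache[key]

    def put(self, key, value):
        self.cache[key] = value            # visible to readers immediately
        self.pending.append((key, value))  # persisted asynchronously

    def flush(self):
        """Drain queued writes; every one still lands on the shared store."""
        while self.pending:
            key, value = self.pending.popleft()
            self.database[key] = value


database = {"item:1": "widget"}
cache = WriteBehindCache(database)
cache.get("item:1")
cache.get("item:1")                 # second read is served from the cache
cache.put("item:1", "gadget")
print(cache.db_reads, database["item:1"])   # database not yet updated
cache.flush()
print(database["item:1"])
```

Reads scale with the number of cache fragments, but `flush` shows why the shared write cost does not go away: each write is still applied to the one shared store.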

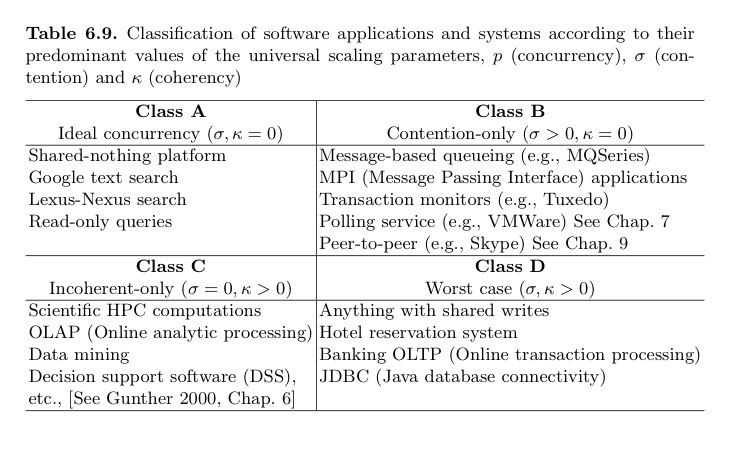

Here's one of Neil Gunther's graphics that shows software scalability tradeoffs based on your data's potential of contention, or your architecture's coherency overhead:

The universal scalability equation is:

$$C(N) = \frac{N}{1 + \sigma (N - 1) + \kappa N (N - 1)}$$

Where, for software scale, N is the number of active threads/processes in your app server, σ is the data contention parameter, and κ is the cache coherency-delay parameter. Read the Guerrilla Capacity Planning Manual for more details, or pick up his book.
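
To get a feel for the model, here is a small sketch that evaluates it, assuming the commonly published form of the universal scalability law, C(N) = N / (1 + σ(N − 1) + κN(N − 1)). The parameter values are made up for illustration and are not measurements of any real system.

```python
import math


def usl_capacity(n: float, sigma: float, kappa: float) -> float:
    """Relative capacity C(N) for N active threads/processes."""
    return n / (1 + sigma * (n - 1) + kappa * n * (n - 1))


def peak_concurrency(sigma: float, kappa: float) -> float:
    """N at which C(N) is largest; follows from setting dC/dN = 0."""
    if kappa == 0:
        return math.inf   # no coherency delay: capacity never turns down
    return math.sqrt((1 - sigma) / kappa)


# Illustrative (assumed) parameters only.
profiles = {
    "near shared-nothing": (0.0, 0.0),
    "contention only": (0.05, 0.0),
    "contention + coherency": (0.05, 0.001),
}

for name, (sigma, kappa) in profiles.items():
    capacities = [round(usl_capacity(n, sigma, kappa), 1) for n in (1, 8, 32, 128)]
    print(name, capacities, "peak N:", peak_concurrency(sigma, kappa))
```

With σ = κ = 0 capacity grows linearly. With contention alone it flattens toward 1/σ. Once κ is non-zero it peaks and then declines, which is the retrograde behaviour that more hardware cannot fix.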

I like this model, but there are some caveats: Firstly, I don't quite understand why Tuxedo is in Class B, yet OLTP is in Class D. Secondly, Class D's examples are so high-level that they may be misleading. The real problem here is "shared writes", which can be further broken down into a) "hotspots", i.e. a record that everyone wants to update concurrently, and b) limited write volumes due to transaction commits needing durability.

Having said this, this model shows the fundamental difference between "Shared-Nothing" and our multi-node, distributed-cache "Shared-Something". Shared-nothing architectures are those that have near-zero contention or coherency costs, whereas shared-something is about providing systems that reduce the coherency & contention delays for Class D software but don't eliminate them. They're helping the underlying hardware scalability, but not changing the nature of the software itself.

For example, write-through caching, whether in Tangosol or in a SAN array's cache, can help raise commit volumes. Oracle RAC has one Tlog per cluster node, also potentially raising volumes. Networked cache coherency eliminates disk latency. But the important thing to recognize is that the nature of the software hasn't changed; we've just pushed out the scaling asymptote for certain workloads.

Anyway, let's please call a spade a spade, mm'kay? I just don't like muddied waters, this stuff is hard enough as it is....

January 03, 2008

The good in WS-*

Ganesh:

"Believe me, it would greatly clear the air if a REST advocate sat down and listed out things in SOAP/WS-* that were “good” and worth adopting by REST. It would not weaken the argument for REST one bit, and it would reassure non-partisans like myself that there are reasonable people on both sides of the debate."

I'll bite. I'll look at what I think are "good", what the improvements could be in a RESTful world, and what's actually happening today. My opinions only, of course. I will refrain from discussing those specs I think are bad or ugly.

The good:

WS-Security, WS-Trust, and WS-SecureConversation

What's good about them?

- They raise security to the application layer. Security is an end-to-end consideration; it's necessarily incomplete at lower levels.

- Message-level security enhances visibility. Visibility is one of REST's key design goals. REST should adopt a technology to address this.

What could be improved?

- It's tied to XML. All non-XML data must be wired through the XML InfoSet. XML Canonicalisation sucks.

- WS-Security itself does not use derived keys, and is thus not very secure. Hence, WS-SecureConversation. But that's not well supported.

- WS-Trust arguably overlaps with some other popular specs. Some OASIS ratified specs, like WS-SecureConversation, rely on WS-Trust, which is still a draft.

- For WS-Trust and WS-SC, compatibility with only one reference implementation is what vendors tend to test. Compatibility with others: "Here be dragons".

What's happening in the RESTful world?

- SixApart has mapped the WSSE header into an HTTP header

- We could use S/MIME. There are problems with that, but there is still reason to explore this. See OpenID Data Transport Protocol Draft (key discovery, and messages) for examples of how this would work.

- One challenge that I have not seen addressed yet in the REST world is the use of derived keys in securing messages. WS-Security has this problem: reusing the same asymmetric key for encryption is both computationally expensive and a security risk. WS-SecureConversation was introduced to fix this and make WS-Security work more like SSL, just at the message level. SSL works by using derived keys: the asymmetric key is used during handshake to derive a symmetric cryptographic key, which is less expensive to use.
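
To make the derived-key idea concrete, here is a minimal sketch using HKDF (RFC 5869) with HMAC-SHA256 from Python's standard library. It is not the derivation algorithm that WS-SecureConversation or SSL specifies. The random master secret stands in for whatever an asymmetric handshake would produce, and the context URI and message are hypothetical.

```python
import hashlib
import hmac
import os


def hkdf_sha256(secret: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF extract-and-expand (RFC 5869) over HMAC-SHA256."""
    pseudo_random_key = hmac.new(salt, secret, hashlib.sha256).digest()
    output, block, counter = b"", b"", 1
    while len(output) < length:
        block = hmac.new(pseudo_random_key, block + info + bytes([counter]),
                         hashlib.sha256).digest()
        output += block
        counter += 1
    return output[:length]


def sign(key: bytes, message: bytes) -> str:
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(key: bytes, message: bytes, tag: str) -> bool:
    return hmac.compare_digest(sign(key, message), tag)


# Assumed to come from an expensive asymmetric handshake, performed once.
master_secret = os.urandom(32)
salt = os.urandom(16)

# Hypothetical URI naming the security context.
context_uri = b"https://example.org/security-contexts/42"

# Cheap symmetric keys, one per purpose, all derived from the one secret.
signing_key = hkdf_sha256(master_secret, salt, b"sign|" + context_uri)
encryption_key = hkdf_sha256(master_secret, salt, b"encrypt|" + context_uri)

message = b"PUT /orders/17 qty=3"
tag = sign(signing_key, message)
print(verify(signing_key, message, tag))
```

The asymmetric key is used only to establish `master_secret`; every message afterwards is protected with a derived symmetric key, so the long-term key is neither reused per message nor paid for per message.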

I recall Rich Salz, almost 3 years ago, claiming that an SSL-like protocol (like WS-SecureConversation) could not be RESTful because it has state. This isn't entirely true; authorization schemes like HTTP digest require server-side state maintenance (the nonce cache) and we don't seem to complain that this breaks HTTP. (Digest interoperability itself is often broken, but that's another story). REST stores state in two spots: (a) on the client, and (b) in resources. So, the answer seems to be, ensure the derived key (and metadata) is identified by a URI, and include a URI in the HTTP header to identify the security context. Trusted intermediaries that would like to understand the derived keys could HTTP GET that URI and cache the result. You'd probably have to use an alternate authentication mechanism (HTTP Basic over SSL, for example) to bootstrap this, but that seems reasonable. I'd like to see the OpenID Data Transport Protocol Service Key Discovery head in this direction.

WS-Coordination and WS-AtomicTransaction

What's good about them?

- Volatile or Durable two-phase commit. It works across a surprising number of App servers and TP monitors, including CICS, Microsoft-DTC (WCF), and J2EE app servers like Glassfish or JBoss. It will be very useful to smooth interoperability among them.

What could be improved?

- It needs more widespread deployment. People are making do (painstakingly) with language-level XA drivers when they need 2PC across environments, so it may take a while for WS-AT to gain traction.

- Most of my problems with WS-AT are problems that apply equally to other 2PC protocols. I list them here because they will become "promoted" in importance now that the vendor interoperability issues have been solved with WS-AT.

- Isolation levels & boundaries. As I've mentioned in my brief exchange with Mark Little (and I'm sorry I didn't continue the thread), I think there will be lurking interoperability and performance problems. For example, isolation boundaries are basically up to the application, and thus will be different for every service interface. Like XA, the default isolation for good interop will likely be "fully serializable" isolation, though it's not clear that a client can assume that _all_ data in a SOAP body would have this property, as there might be some transient data.

- Latency. Like any 2PC protocol, WS-AT is only viable in a low-latency environment like an intranet, and specific data items cannot require a high volume of updates. A typical end-to-end transaction completion involving two services will require at minimum 3 to 4 round-trips among the services. For example, given Service A is the transaction initiator and also is colocated with the coordinator, we have the following round trips: 1 for tx register, 1 for a 'read' action, 1 for a 'write' action, and 1 for prepare. If your write action can take advantage of a field call, you could reduce this to 3 round trips by eliminating the read. The number of trips will grow very fast if you have transaction initiators and coordinators that are remote to one of the participating services, or if you start mixing in multiple types of coordinators, such as WS-BusinessActivity.

Here is a latency-focused "when distributed transactions are an option" rule of thumb: be sure any single piece of data does not require transactionally consistent access (read OR write!) more often than 1 / (N*d + c) times per second, where N is the number of network trips required for a global transaction completion, d is the average latency between services in seconds, and c is the constant overhead in seconds for CPU usage and log disk I/O (a log write is usually required for each written-to service + the coordinator). If you exceed this rate, distributed transactions will hurt your ability to keep up. This rule does not account for failures & recovery, so, adjust for MTTF and MTTR...

An example best case, in a private LAN environment with:

- 0.5 ms network latency (i.e. unsaturated GigE)

- "write only" transaction (3 trips) from Service A to Service B

- a "c" of 3 disks (coordinator, service 1, service 2) with 1 ms log write latency (which assumes a very fast write-cached disk!)
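
Plugging these best-case numbers into the rule of thumb gives a concrete ceiling. The sketch below assumes the three 1 ms log writes happen one after another, so c = 3 ms; if they overlapped, c would be smaller and the ceiling higher. The function name is just for illustration.

```python
def max_consistent_access_rate(trips: int, latency_s: float, overhead_s: float) -> float:
    """Rule of thumb: 1 / (N*d + c) accesses per second to one piece of data."""
    return 1.0 / (trips * latency_s + overhead_s)


best_case = max_consistent_access_rate(
    trips=3,                 # "write only" transaction
    latency_s=0.0005,        # 0.5 ms between services
    overhead_s=3 * 0.001,    # three serial 1 ms log writes (assumption)
)
print(f"{best_case:.0f} transactionally consistent accesses per second")  # 222

# The same transaction across a 20 ms WAN link (an assumed figure).
wan_case = max_consistent_access_rate(trips=3, latency_s=0.020, overhead_s=3 * 0.001)
print(f"{wan_case:.1f} per second")
```

So even in the best case, a single hot record tops out at roughly 222 transactional accesses per second, and the ceiling falls quickly as latency grows.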

- Availability. Again, this isn't really WS-AT's fault, as this problem existed in COM+ and EJB before it, but WS-AT's potential success would catapult this into the limelight. Here's the sitch: Normally, if you enroll a database or a queue into a 2PC, it knows something about the data you're accessing, so it can make some good decisions about balancing isolation, consistency, and availability. For example, it may use "row locks", which are far more granular than "table locks". Some also have "range locks" to isolate larger subsets of data. The component framework usually delegates to the database to handle this, as the component itself knows nothing about data and is usually way too coarse grained to exclusively lock without a massive impact on data availability.

In WS-land, a similar situation is going to occur. WS stacks tend to know very little about data granularity & locking, while DBMS do. So, most will rely on the DBMS. Yet relying on the DBMS to handle locks will defeat a lot of service-layer performance optimizations (like caching intermediaries, etc.), relegating most services to the equivalent of stateless session beans with angle brackets. This doesn't seem to be what SOA is about. So, what's the improvement I'm suggesting here? Service frameworks need to become smarter in terms of understanding & describing data set boundaries. RESTful HTTP doesn't provide all the answers here, but it does help the caching & locking problem with URIs and ETags w/ Conditional-PUT and Conditional-GET.
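
Here is a minimal in-memory sketch of how ETags with conditional GET and PUT give optimistic concurrency per URI, with no locks held between requests. The `ResourceStore` class and the idea of hashing the body to form the ETag are assumptions for illustration; the status codes (200, 201, 304, 412) are the standard HTTP ones.

```python
import hashlib


def make_etag(body: bytes) -> str:
    # Assumption: the ETag is a hash of the representation.
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


class ResourceStore:
    def __init__(self):
        self._bodies = {}

    def get(self, uri, if_none_match=None):
        """Conditional GET: 304 lets a cache reuse its copy."""
        body = self._bodies[uri]
        etag = make_etag(body)
        if if_none_match == etag:
            return 304, etag, None
        return 200, etag, body

    def put(self, uri, body, if_match=None):
        """Conditional PUT: 412 if the resource changed since it was read."""
        current = self._bodies.get(uri)
        if if_match is not None:
            if current is None or make_etag(current) != if_match:
                return 412, None
        self._bodies[uri] = body
        return (201 if current is None else 200), make_etag(body)


store = ResourceStore()
store.put("/orders/17", b"qty=1")

# Two clients read the same version of the resource.
_, etag_a, _ = store.get("/orders/17")
_, etag_b, _ = store.get("/orders/17")

print(store.put("/orders/17", b"qty=2", if_match=etag_a)[0])  # first writer wins
print(store.put("/orders/17", b"qty=5", if_match=etag_b)[0])  # stale writer is rejected
```

The boundary of the "lock" is the resource identified by the URI, which is the kind of data set boundary a service framework would need to understand.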

What's happening in the RESTful world?

- Firstly, there's the question of whether it's possible to have ACID properties across a uniform interface. The answer to me is: sure, if you own all the resources, and you don't care there is no standard. With standard HTTP/HTML hypermedia, one just has to bake support into their application using PUT/POST actions for boundaries. Picture any website with an "edit mode" with undo or confirm, and you've pretty much enabled the ACID properties. Unfortunately, each site would have a non-standard set of conventions to enable this, which hurts visibility.

- Enabling a standard (visible) protocol for REST across different resources might be possible; Rohit has sketched this out in his thesis for 2-way agreements (i.e. the REST+D style), which is effectively a one-phase commit, and for N-way resource replicas (i.e. the ARREST+D style), and he also showed how the implementation would fit into the current Web architecture. We're already seeing his work popularized. Anyway, for a distributed commit, one possibly could extend the MutexLock gateway to support snapshot isolation, and also act as a coordinator (moving this to a two-phase protocol). But the caveats above apply -- this would only be useful for REST inside an intranet and for data that is not very hot. You still would require a Web of Trust across all participants -- downtime or heuristic errors would lock all participating resources from future updates.

WS-Choreography Description Language

What's good about it?

- It's an attempt to describe functional contracts among a set of participants. This allows for bi-simulation to verify variance from the contract at runtime. Think of it like a way to describe expected sequences, choices, assertions, pre & post-conditions for concurrent interactions.

What could be improved?

- I think that the world of computing gradually will shift to interaction machines as a complement to Turing machines, but this is going to take time. WS-CDL is very forward thinking, dealing with a topic that is just leaving the halls of academia. It may have been premature to make a spec out of this, before (complete) products exist.

- See this article for some interesting drawbacks to the current state of WS-CDL 1.0.

- WS-CDL is tightly coupled to WSDL and XSDs. It almost completely ignores Webarch.

What's happening in the RESTful world?

- Not much, that I'm aware of.

Security Assertions Markup Language (SAML)

What's good?

- Federated security assertions for both web SSO and service-to-service invocations.

- Trust models based on cryptographic trust systems such as Kerberos or PKI.

- Both open source implementations and vendor implementations.

What could be improved?

- It doesn't have a profile to take advantage of HTTP's Authorization mechanism; this is because browsers don't allow extensibility there. It's not a deal-breaker, it's a smell that goes beyond SAML (browsers haven't changed much since Netscape's decisions in the 90's).

- It assumes authentication is done once, and then stored in a cookie or a session. To be RESTful, it should be either asserted on each request, or stored in a resource, and the URI should be noted in an HTTP header or in the body as the reference to the assertion (similar to OpenID).

- While the actual Browser profiles are generally RESTful, the API for querying attributes, etc. is based on SOAP.

- SAML over SSL is easy to understand. SAML over XML Signature and Encryption is a bitch to understand (especially holder-of-key).

- It is a bit heavyweight. Assertions contain metadata that's often duplicated elsewhere (such as your transport headers).

- There are several different identity & attribute formats that it supports (UUID, DCE PAC, X.500/LDAP, etc.). Mapping across identifiers may be useful inside an enterprise, but it won't scale as well as a uniform identifier.

What's happening in the RESTful world?

- OpenID 2.0. It doesn't cover everything, there are questions about phishing abuse, but it's probably good enough. SAML is a clear influence here. The major difference is that it uses HTTP URIs for identity, whereas SAML uses any string format that an IdP picks (there are several available).

The questionable:

WS Business Process Execution Language (WS-BPEL)

What's good?

- Raising the abstraction bar for a domain language specifying sequential processes.

What could be improved?

- It's more focused on programmers (and hence, vendors selling programmer tools) than on the problem space of BPM and Workflow.

- It relies on a central orchestrator, and thus seems rather like a programming language in XML.

- Very XML focused; binding to specific languages requires a container-specific extension like Apache WSIF or JCA or SCA or ....

- BPEL4People and WS-HumanTask are a work in progress. Considering the vast majority of business processes involve people, I'd say this is a glaring limitation.

- BPEL treats data as messages, not as data that has identity, provenance, quality, reputation, etc.

What's happening in the RESTful world?

- I think there is a big opportunity for a standard human tasklist media type. I haven't scoured around the internet for this; if anyone knows of one, please let me know. This would be a win for several communities: the BPM community today has no real standard, and neither does the REST community. The problem is pretty similar whether you're doing human tasks for a call center or for a social network, in a social or an enterprise setting. Look at Facebook notifications as a hint. Semantics might include "activity", "next steps", "assignment", etc. One could map the result into a microformat, and then we'd have Facebook-like mini-feeds and notifications without the garden wall.

- As for a "process execution language" in the REST world, I think, if any, it probably would be a form of choreography, since state transitions occur through networked hypermedia, not a centrally specified orchestrator.

Other questionables include SOAP mustUnderstand, WS-ReliableMessaging and WS-Policy. But I don't really have much to say about them that others haven't already.

Phew! Wall of text crits you for 3831. So much for being brief...